SenseCam: An FAQ About My Personal Experiences Wearing One

I have written a little about the SenseCam and my experiences with it, but there are still many questions people ask, so I thought I would attempt to answer some of the more common ones here.

What is a SenseCam?

A SenseCam is a gadget that, at the very least, captures images of people and places at regular intervals or when the camera determines something "interesting" is taking place in front of the lens.

Unlike a regular camera that the user interacts with by holding it up to the subject to be photographed and then depressing a button, the SenseCam automatically snaps pictures as and when it decides.

A SenseCam is worn on a lanyard around the neck, hung from a belt loop, or attached to an arm band. By taking regular images throughout the day, as the wearer of the SenseCam goes about their regular daily life, a visual record is built up of the places the wearer has visited, the people they interacted with and the activities they engaged in.

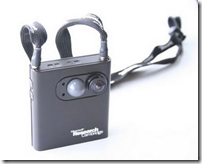

These are pictures of SenseCam devices created by Microsoft.

And if you are curious about my particular SenseCam, it is this:

It is just a plain vanilla SONY Ericsson k850i with a custom SenseCam application running on it. After all, “software maketh the machine”, a concept I have been preaching for the past 30 years.

Who wears a SenseCam?

Lifeloggers/lifebloggers/lifegloggers, technology mavericks, Alzheimer patients or anybody interested in capturing the significant and not so significant events in their life.

Some notable technology people wear a SenseCam or SenseCam-like device, such as Steve Mann and Gordon Bell.

Why do you wear a SenseCam?

I developed and began wearing a SenseCam-like device with the notion of recording as much as possible of the activity and decisions taking place within my software start-up company.

In the intervening time, wearing a SenseCam has taken on different aspects and I now also use it to remember significant events within my life such as a Christmas party I attended, dinner with friends, or other important times. I hope that one day all of the data I am collecting can be processed and perhaps provide an interesting case study of a human life.

Though the community is small, more and more people are beginning to show interest in SenseCam-like devices for recording their lives, events, and also as an evidence gathering technique.

Even before the term SenseCam was coined, before computers and electronics could be miniaturised sufficiently, people, such as Buckminster Fuller, gathered copious amounts of data about their own lives, leaving nothing out in case it was significant.

What do SenseCam images look like?

SenseCam images look just like regular photographs taken with a digital camera. Sometimes they are blurry, especially when the image was taken under electric lighting with a lot of movement by the wearer, but many times the pictures are crystal clear.

Below are some examples of SenseCam images that I have taken around the Los Angeles and San Francisco area.

I am currently experimenting with making use of the accelerometers in the cell phone to counteract the excessively blurry images captured under electric lights. This works by taking the picture the instant that the accelerometer indicates that certain types of movement that enhance the blur effect have ceased long enough to capture an adequate blur-free image.
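The idea of waiting for movement to settle before taking the picture can be sketched roughly as follows. This is not the actual SenseCam code: the class name `StillnessGate`, the threshold, and the sample count are all assumptions made for illustration, and the sketch only looks at how far the total acceleration deviates from gravity, which will miss some kinds of rotation.

```python
import math
from collections import deque

GRAVITY = 9.81  # m/s^2, what a motionless accelerometer should report in total


class StillnessGate:
    """Reports when recent accelerometer readings look steady enough to shoot."""

    def __init__(self, threshold=0.5, required_samples=5):
        # threshold: allowed deviation from 1 g in m/s^2 (assumed value)
        # required_samples: how many consecutive steady samples are needed (assumed)
        self.threshold = threshold
        self.recent = deque(maxlen=required_samples)

    def update(self, ax, ay, az):
        """Feed one sample; return True once the last N samples were all steady."""
        magnitude = math.sqrt(ax * ax + ay * ay + az * az)
        self.recent.append(abs(magnitude - GRAVITY) < self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)
```

A capture loop would call `update()` for every accelerometer sample and fire the shutter the first time it returns `True`, which delays the picture until the wearer has been steady for a short run of samples.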

How many images does the SenseCam take?

That depends on the activity level of the scene and also how much I am moving. The cell phone I use that runs the SenseCam software has accelerometers in it, so the software can determine how far I have moved, and take a picture if it is a significant distance, which at this time is set at 3 metres.

The SenseCam will also take a picture if it recognises a human face in the scene, though this feature is not particularly robust at this time due to the constraints I have placed on the software. These constraints are imposed by considerations of power consumption rather than computing power.

Pictures are also captured if there is a significant change in light levels within the environment, usually indicating a move from one location to another.

And finally, the SenseCam will capture an image at regular time intervals, which I set to every 15 seconds, though this is user definable through a simple graphical user interface menu system.
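Taken together, the four triggers described above (distance moved, a detected face, a change in light level, and the timer) amount to a simple decision made for each new set of sensor readings. The sketch below is an illustration only, not the actual SenseCam code: the 15 second and 3 metre defaults come from this article, while `CaptureState`, `capture_reason`, and the `light_ratio` threshold are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CaptureState:
    seconds_since_last: float  # time since the previous picture
    metres_since_last: float   # estimated distance moved since the previous picture
    light_now: float           # current light level (arbitrary units)
    light_at_last: float       # light level when the previous picture was taken
    face_detected: bool        # result of whatever face detector is in use


def capture_reason(state, interval_s=15.0, distance_m=3.0, light_ratio=0.5):
    """Return the name of the first trigger that fires, or None if none does."""
    if state.face_detected:
        return "face"
    if state.metres_since_last >= distance_m:
        return "distance"
    brightest = max(state.light_now, state.light_at_last)
    # A large relative change in brightness suggests a move to a new location.
    if brightest > 0 and abs(state.light_now - state.light_at_last) / brightest >= light_ratio:
        return "light"
    if state.seconds_since_last >= interval_s:
        return "interval"
    return None
```

Returning the reason rather than a bare yes/no makes it possible to log why each picture was taken, which could be useful later when deciding which images mark significant events.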

Over the course of a day I will capture between 1,000 and 9,000 images depending on scene activity, events in my life, how long I am awake for, and also how long I wear the SenseCam during the day.
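A quick back-of-the-envelope check shows how the 15 second timer relates to these daily totals. The helper names below are made up for illustration, and the calculation assumes the timer fires exactly every 15 seconds for the whole time the SenseCam is worn.

```python
INTERVAL_S = 15  # timer interval quoted in this article


def timer_images(hours_worn, interval_s=INTERVAL_S):
    """Images produced by the interval timer alone over the given wear time."""
    return int(hours_worn * 3600 // interval_s)


def hours_for(images, interval_s=INTERVAL_S):
    """Wear time in hours needed for the timer alone to produce this many images."""
    return images * interval_s / 3600


print(timer_images(16))            # 3840 images in a 16 hour waking day
print(timer_images(24))            # 5760 images, the most the timer alone can give in a day
print(round(hours_for(1000), 1))   # 4.2 hours of wear for the low end of the range
```

Under these assumptions the timer on its own cannot reach 9,000 images in a day, so the upper end of the range has to include pictures taken by the movement, face, and light-level triggers.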

How long have you been wearing a SenseCam for?

I have been wearing my SenseCam for a little over two years. In that time I have captured approximately 500GB of data totalling approximately 1,300,000 images and a little over 10,000 hours of audio.

Do you always wear your SenseCam?

At this time, no. There are many days when I do not wear the SenseCam at all, simply because nothing exciting is going to happen when I am walking at my treadmill desk, with the door closed, writing out articles like this.

I wear the SenseCam only occasionally on mundane days; it is when I leave my home or office that the possibility of exciting things happening around me rises. I have enough commonplace pictures of me drinking coffee, cleaning my bicycle, eating lunch, or writing code that I do not feel a need to capture any more. (Maybe not so many pictures of me cleaning my bicycle.)

Having said that, I might not be wearing my SenseCam and taking pictures all the time, but 24 hours a day, the SenseCam is recording audio and geo-location data as I go through life.

What resolution do you capture images at?

I have a cell phone with a five mega-pixel camera so I capture at that resolution normally, with about a 60% JPG compression setting. I find this gives good quality photos without overly taxing the available memory space.

As far as I can determine, capturing at higher or lower resolutions, or with more or less JPEG compression, has a negligible effect on battery life, so the 60% setting is really just for the convenience of storage space.

What lens do you use?

I make use of two lenses on my SenseCam: the built-in lens of the cell phone, which is not particularly good at capturing a lot of the scene in front of me, and a cheap, magnetic mount wide-angle lens I purchased at Fry’s Electronics that captures a larger area.

The wide-angle lens is a little heavier and more obtrusive when it is pointing at people, so I usually only make use of it when I am out and about, running errands.

How long does the battery last?

Depending on how much activity is taking place, the current SenseCam battery lasts anywhere between 6 hours and 18 hours. As the SenseCam is just a regular cell phone running some fancy software it is trivial to carry a spare battery, and quickly switch it out before it drains away too much. I can also charge the battery via USB, and I do just that when I am walking on my treadmill or driving around town.

What sensor data do you collect?

Apart from the images and audio, the SenseCam I wear collects geo-location information from cellular towers, light levels, acceleration and tilt. My SenseCam is a piece of software that runs on a high end cell phone, so I am limited only by the sensors built into it. I also capture GPS position from a GPS data logger.

I am not willing to begin hardware hacking until I have software that I feel makes it worth expending the extra effort, time and money.

How do you use the sensor data?

The SenseCam software uses the light levels to determine if someone has walked in front of the camera, or if the wearer has entered a new location.

The geo-location data determines where the wearer is situated within the physical world, and that position is used to tag images once I upload them to my workstation.

I am not making full use of tilt angle and acceleration at this time, but intend to do so in the near future to auto-correct for the angle that the SenseCam was at when the picture was taken.
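One way such a correction could work is to estimate how far the camera was rotated about the lens axis from the direction of gravity, then rotate the image back by that amount. The sketch below is only an illustration under an assumed axis convention (x to the right of the image, y towards the top, and a reading of roughly (0, +g) when the phone is upright); a real device may need the signs flipped, and the function names are hypothetical.

```python
import math


def roll_degrees(ax, ay):
    """Rotation of the camera about the lens axis, in degrees.

    Assumes ax and ay are the accelerometer components in the image plane
    and that an upright, motionless phone reads roughly (0, +g).
    """
    return math.degrees(math.atan2(ax, ay))


def correction_degrees(ax, ay):
    """Angle to rotate the saved image by so that the horizon is level again."""
    return -roll_degrees(ax, ay)
```

The resulting angle could be handed to whatever image library performs the actual rotation. The estimate is only meaningful when the wearer is fairly still, since movement adds its own acceleration on top of gravity.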

What features does a regular SenseCam have over yours?

Microsoft’s SenseCam has a lot of research dollars thrown at it. Mine has just me and whatever time I can spare to cobble together the software. In terms of hardware, the only two features I can find mentioned in Microsoft’s SenseCam that I cannot replicate at this time are a built-in heart rate monitor and an infra-red sensor for detecting body heat.

There may be SenseCam models containing sensors other than the standard complement, a bit like unique Doctor Who Daleks modified for a specific mission, but I have yet to locate any information on them.

In terms of software, it appears that Microsoft’s Research Centre has created many different desktop applications for determining significant life events, replaying the daily record of images, etc. This is where I fall behind because I just do not have the man hours available to me to emulate all of the work they are doing.

What features does your SenseCam have over Microsoft’s?

Other than the fact that my SenseCam is a fully functional cell phone, the major hardware differences between my SenseCam and Microsoft’s are the amount of storage, the amount of computing power, the battery life, and the high resolution, 5MP (five mega-pixel) camera. By using a cell phone as a SenseCam, I also get a super-bright LED light that can be enabled for low-level lighting conditions, an LCD screen to review images I have taken, and audio recording capability with audio playback for a quick review.

For software, I cannot compete with a team of programmers and researchers but I can learn from their work and create similar applications to them.

My on-phone SenseCam software has a "take picture now" button, a "quick delete" button, "suspend capture" button, "pause/resume" audio recording button, a pedometer, snapshot time logging reminder, gated time logging, and other features I have tinkered around with over the past two years.

Do you have any intention of releasing this software?

At this time I have no intention of releasing my SenseCam software. That is not to say that I will not. Just for now, no.

The software is incredibly easy to replicate and anybody interested in life logging with a SenseCam could create the basic SenseCam software in a matter of days.

One of the major reasons I am not prepared to release the software at this time is I am not willing to turn it into a project that I have to support. The SenseCam software only runs on two cell phone models that I know of, the SONY Ericsson k790a and the SONY Ericsson k850i.

Along with that, the Python application, PySenseCam, is certainly not ready for prime time. All of the interface is programmer designed and thrown together, so just trying to use it and make sense of it would require a willingness to tinker and put up with untold bugs that, at this time, I am not willing to fix.

How can I build a SenseCam for my own use?

My recommendation if you want to quickly explore creating your own SenseCam is to get a quality cell phone with a good OS, such as Android, iPhone, or a high quality J2ME phone.

The SenseCam software that runs on the cell phone should not take more than a day or two to get up and running, and then you can tinker and add features. The desktop software that reviews those images and lets you tag them, manipulate them, and make sense of them is where most of your development effort will be spent.

Many people, including the popular press, right now are focusing on the device, rather than thinking about the software. It is a little like worrying about the engine in a car and all the things it can do rather than what the car is and how it will change the user and society and how it will need to change to accommodate the user and our future needs. We are all concerned with "what" a SenseCam is about, rather than "how" a SenseCam is about.

Who developed this software?

The SenseCam software running on my SONY Ericsson k850i was developed by me, Justin Lloyd, in my spare time, whilst managing and running my video game software start-up, Infinite Monkey Factory.

There is also a companion application, called PySenseCam, that manipulates and wrangles the large quantities of collected data. It ties in not just to the SenseCam but also to any audio I have recorded, GPS geo-location, websites I have visited and snapshots of my computer desktop that are taken at regular intervals. In a way, I am attempting to make PySenseCam replicate the functionality of MyLifeBits or the Dymaxion Chronofile.

Who developed the original idea of the SenseCam?

The original name "SenseCam" was coined at Microsoft Research Centre in Cambridge, England, by, as far as I can tell, Lyndsay Williams, one of the original researchers on the project.

Gordon Bell has done a lot of work with the SenseCam too, but the original idea has been thought up multiple times by many people, with the earliest known reference being the "memex" by Vannevar Bush, which dates back to the mid-1940s.

From early 2001 up to Thanksgiving 2006 I had been using a digital voice recorder and general purpose digital camera to log all of my conversations and take snapshots of interest for events or tasks I would need to remember later, such as purchasing a particular book I had just seen.

I thought I was coming up with something groundbreaking and original around Thanksgiving 2006 when I sketched out the idea in my notebook, after seeking and not finding an adequate commercial solution that would do what I wanted.

I began developing the idea further, looking for a simple device I could put to use, when Apple announced the iPhone in Q1 of 2007. Aha, the light goes off above my head, just what I want. And then SONY Ericsson announced the k790a, which was smaller, lighter and more practical with an operating system I was already familiar with.

I developed an application for the cell phone over the course of a couple of days, and a desktop companion application to manipulate the data in the following weeks. The result, which at that time was just called "snapshot prototype", did most of what the main functions of the Microsoft Research SenseCam can do today.

A month or two after developing the software, in April or May of 2007, I spotted Gordon Bell on the cover of a magazine that was about five months old by that time, with an article talking about "his" SenseCam and I immediately thought "bugger, nothing new under the Sun after all." The magazine was published around the same time I was coming up with my concept for my own "SenseCam," though I did not call it by that name at the time.

The original article covering Gordon Bell’s experiences with a SenseCam is here and here. Interestingly, some of the points raised by the original article author I have also written about in the past with regard to how our perception of memory changes what it means to be human. What if you could forget anything you chose? What if you could remember so vividly an experience that you would swear it was real? What happens when every human thought has already been thought? I will transcribe those talks and articles into a digital form, bring them up to date and post them here in the near future.

What do you do with all of the data you collect?

Other than pulling out significant events or discussions from the archives to create an article around, at this time, I do not do very much with the collected data.

I grab images for use in my articles on this website, I manually transcribe notes I need to remember, but most of the data sits in a directory on my server collecting the equivalent of digital dust.

The tools do not yet exist to adequately manipulate and wrangle the amount of data that a SenseCam collects. I am working on an application for my personal use that attempts to solve this problem. Unfortunately, competent general purpose machine vision and automated transcription of voice are still some years away from being good enough.

How do you manipulate and identify the images?

I make use of Windows Live Photo Gallery and a small application I have created called PySenseCam, which copies images from the cell phone to a network hard drive, renames them, and sorts them according to the date and time that each image was taken.
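The copy, rename, and sort step could look something like the sketch below. This is not PySenseCam itself: the folder layout, the file naming scheme, and the function names are hypothetical, and the sketch uses each file's modification time as a stand-in for the capture time, which is an assumption that may not hold on every phone.

```python
import shutil
from datetime import datetime
from pathlib import Path


def dated_destination(root, taken, suffix=".jpg"):
    """Build root/YYYY/MM/DD/YYYYMMDD_HHMMSS.jpg for a capture time."""
    folder = Path(root) / taken.strftime("%Y/%m/%d")
    return folder / (taken.strftime("%Y%m%d_%H%M%S") + suffix)


def import_images(source_dir, root):
    """Copy every .jpg in source_dir into dated folders under root."""
    copied = []
    for src in sorted(Path(source_dir).glob("*.jpg")):
        # Assumption: the file's modification time is the time the picture was taken.
        taken = datetime.fromtimestamp(src.stat().st_mtime)
        base = dated_destination(root, taken, src.suffix.lower())
        dest, n = base, 1
        while dest.exists():
            # Two pictures in the same second: add a counter instead of overwriting.
            dest = base.with_name(f"{base.stem}_{n}{base.suffix}")
            n += 1
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)
        copied.append(dest)
    return copied
```

Because the file names start with the date and time, an ordinary alphabetical listing of any folder is also a chronological one.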

PySenseCam tags each image with geo-location data from the cell tower ID and also from available GPS data captured from a small GPS data logger.
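Since the GPS data logger and the camera record independently, tagging an image with a position comes down to finding the logged fix closest in time to the picture. A minimal sketch follows, assuming the fixes are held as time-sorted `(unix_time, lat, lon)` tuples; the function name and the 60 second cut-off are hypothetical choices, not details of PySenseCam.

```python
from bisect import bisect_left


def nearest_fix(fixes, taken, max_gap_s=60):
    """Return the fix closest in time to `taken`, or None if none is close enough.

    fixes: list of (unix_time, lat, lon) tuples sorted by unix_time.
    taken: capture time of the image as a unix timestamp.
    """
    if not fixes:
        return None
    times = [f[0] for f in fixes]
    i = bisect_left(times, taken)
    # Only the fixes just before and just after the capture time can be nearest.
    candidates = fixes[max(i - 1, 0):i + 1]
    best = min(candidates, key=lambda f: abs(f[0] - taken))
    return best if abs(best[0] - taken) <= max_gap_s else None
```

Returning `None` when the nearest fix is too old avoids stamping an image with a position from a different place, for example after the logger lost its signal indoors. In that case a cell tower ID could serve as the coarser fallback.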

How do you store and sort your data?

I store all of my data on a regular hard drive in JPG format for the images, WMA for audio, and XML for cell tower and GPS data. Any other sensor data is stored either as meta-data within the JPG or WMA file, or in a plain XML file depending on what the source was.
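As an illustration of what keeping GPS data in plain XML might involve, the sketch below writes and reads a list of fixes with Python's standard `xml.etree.ElementTree` module. The element and attribute names (`track`, `fix`, `time`, `lat`, `lon`) are invented for this example and are not the format PySenseCam actually uses.

```python
import xml.etree.ElementTree as ET


def fixes_to_xml(fixes):
    """Serialise (unix_time, lat, lon) tuples to an XML string."""
    root = ET.Element("track")
    for unix_time, lat, lon in fixes:
        ET.SubElement(root, "fix", time=str(unix_time),
                      lat=f"{lat:.6f}", lon=f"{lon:.6f}")
    return ET.tostring(root, encoding="unicode")


def fixes_from_xml(text):
    """Parse the XML produced by fixes_to_xml back into a list of tuples."""
    return [(int(e.get("time")), float(e.get("lat")), float(e.get("lon")))
            for e in ET.fromstring(text).iter("fix")]
```

Six decimal places of latitude and longitude is roughly 10 cm of precision, far finer than a consumer GPS logger provides, so nothing meaningful is lost in the round trip.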

How do you view your SenseCam data?

I do this in two ways. I make use of Windows Live Photo Gallery for just browsing and manual tagging, and I also make use of a small Python application, called PySenseCam that I created, which allows me to quickly play back images and data with audio snippets as though it were a slide show of my day.

How do you tag your data?

I am currently working on features for the PySenseCam application, making use of OpenCV and a few other Open Source projects that do rudimentary image detection, for automatically tagging images and determining significant events during my day.

I also use PySenseCam for manually tagging a group of images to indicate start and end times for significant events that are noteworthy.
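Marking an event by its start and end times means the tag never has to be written into each image individually: any image whose timestamp falls inside the range belongs to the event. A small sketch of that lookup follows, with hypothetical names (`Event`, `tag_images`) and timestamps as plain numbers.

```python
from dataclasses import dataclass


@dataclass
class Event:
    label: str    # e.g. "dinner with friends"
    start: float  # unix timestamp of the first image in the event
    end: float    # unix timestamp of the last image in the event


def tag_images(image_times, events):
    """Map each image timestamp to the labels of every event that contains it."""
    return {t: [e.label for e in events if e.start <= t <= e.end]
            for t in image_times}
```

Because the ranges are checked independently, overlapping events are allowed, so a single image can carry more than one label.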

Do you think the SenseCam will become ubiquitous?

I think a SenseCam-like device will eventually become ubiquitous, yes. The SenseCam in its current form is cumbersome and intrusive at times, and I believe that once the hardware engineering problems are solved, much like cell phones and computers, a large swathe of the population in the developed world will make use of such a device. I do not see this happening for at least a decade, possibly two, not until there is a compelling reason to do so, but I do believe it will happen.

One day, you will record your entire daily existence in high-definition video and audio, and on that day, we will all realise just how boring everybody else actually is. Oh, and porn, someone will make a porn movie using a SenseCam-like device in the next decade.

Got other questions I did not answer here? Please send them to me and I will add them to this article or, if they are interesting enough, create an entirely new article based specifically around the questions and issues you raise.